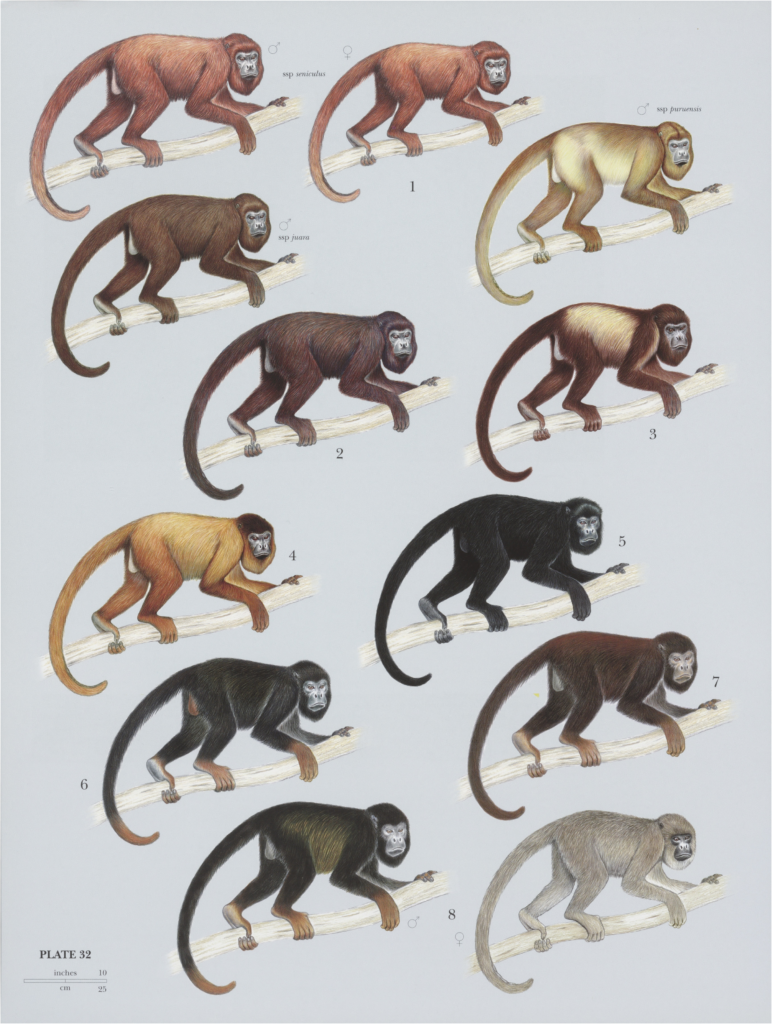

Here is the original picture:

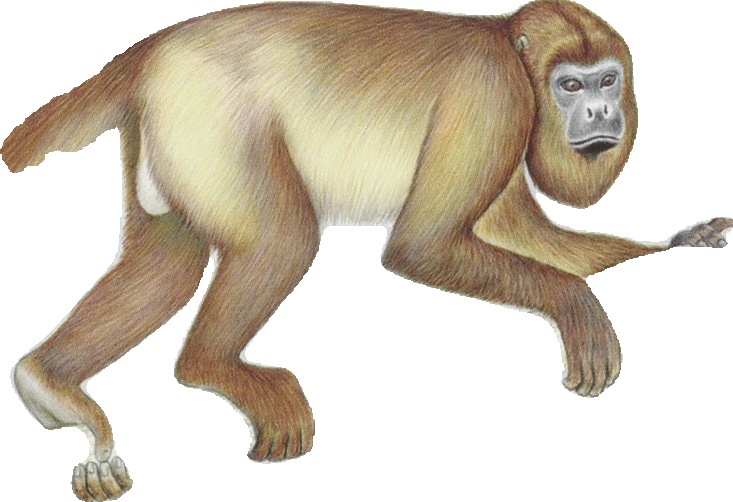

Months ago, I tested YOLOv8's segmentation on it. The result was not very promising:

The tail of one monkey was not segmented correctly.

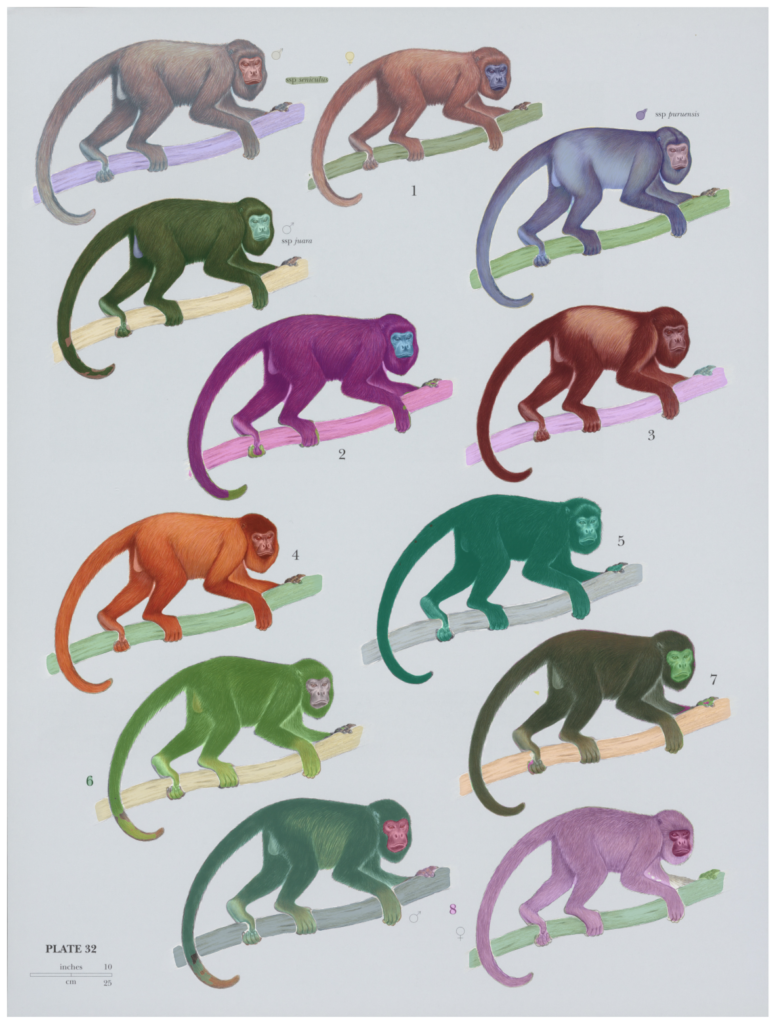

Today I tested the same picture with Meta’s Segment Anything Model (SAM). Using the simple Colab notebook with the “vit_l” model type, the result looks better:

At least the yellow monkey’s tail is now segmented correctly along its whole length.

Note: To run the notebook on a T4 GPU, you may need to set `points_per_batch`, like:

SamAutomaticMaskGenerator(sam, points_per_batch=16)
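
For context, here is a minimal sketch of where that setting fits, assuming the `segment_anything` package and OpenCV are installed and a "vit_l" checkpoint has been downloaded. The helper names (`num_point_batches`, `build_mask_generator`, `segment_image`) and the file paths are my own placeholders, not part of the notebook. As far as I know, the generator samples a 32x32 grid of point prompts by default and processes 64 of them per batch, so lowering `points_per_batch` trades speed for lower peak GPU memory.

```python
import math


def num_point_batches(points_per_side: int = 32, points_per_batch: int = 64) -> int:
    """Batches of point prompts per image, assuming no extra crop layers."""
    return math.ceil(points_per_side ** 2 / points_per_batch)


def build_mask_generator(checkpoint_path, model_type="vit_l",
                         points_per_batch=16, device="cuda"):
    """Load SAM and wrap it in an automatic mask generator (hypothetical helper)."""
    # Imported lazily so num_point_batches works without segment_anything installed.
    from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    sam.to(device=device)
    # Fewer prompts per batch means smaller intermediate tensors on the GPU.
    return SamAutomaticMaskGenerator(sam, points_per_batch=points_per_batch)


def segment_image(mask_generator, image_path):
    """Read an image from disk and return SAM's list of mask records."""
    import cv2

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    # OpenCV loads BGR; the generator expects an RGB uint8 array.
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    return mask_generator.generate(image)
```

With a checkpoint at a path such as `sam_vit_l_0b3195.pth` and a picture such as `monkeys.jpg` (both placeholders here), `segment_image(build_mask_generator("sam_vit_l_0b3195.pth"), "monkeys.jpg")` should return a list of dictionaries, each with a boolean `segmentation` mask. Under the default grid, going from 64 to 16 points per batch means 64 smaller batches instead of 16 larger ones.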

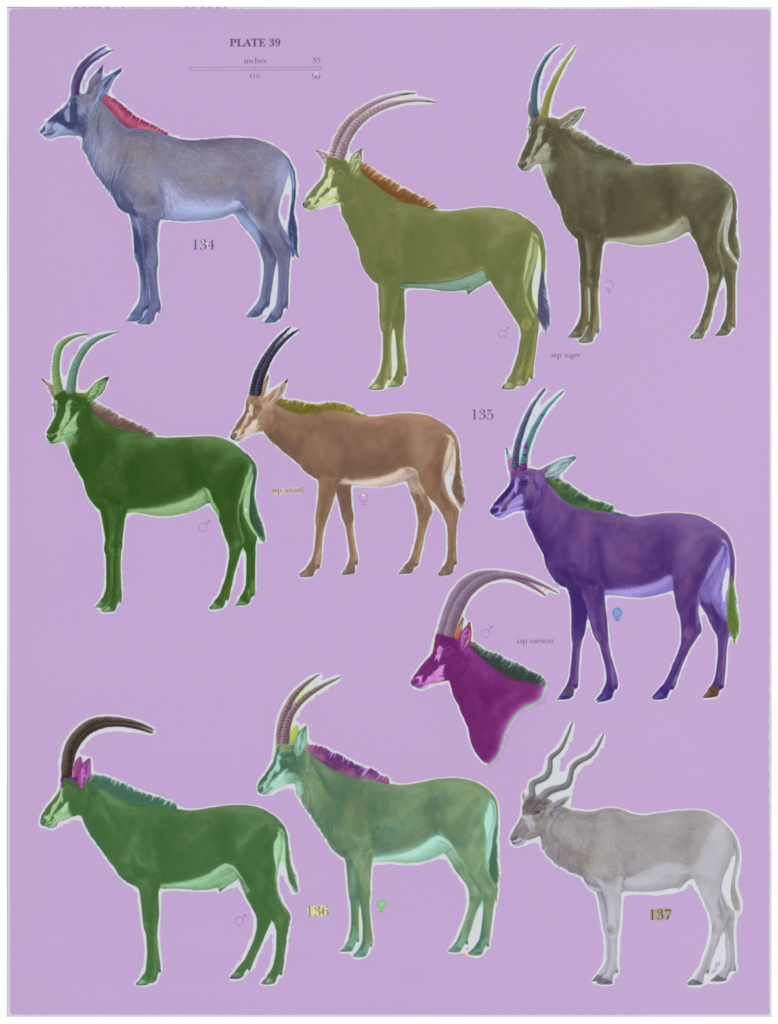

How about running with another picture? The goats:

This time, even SAM couldn’t segment all of the goats’ horns correctly.